495/68 Friday, November 28, 2025

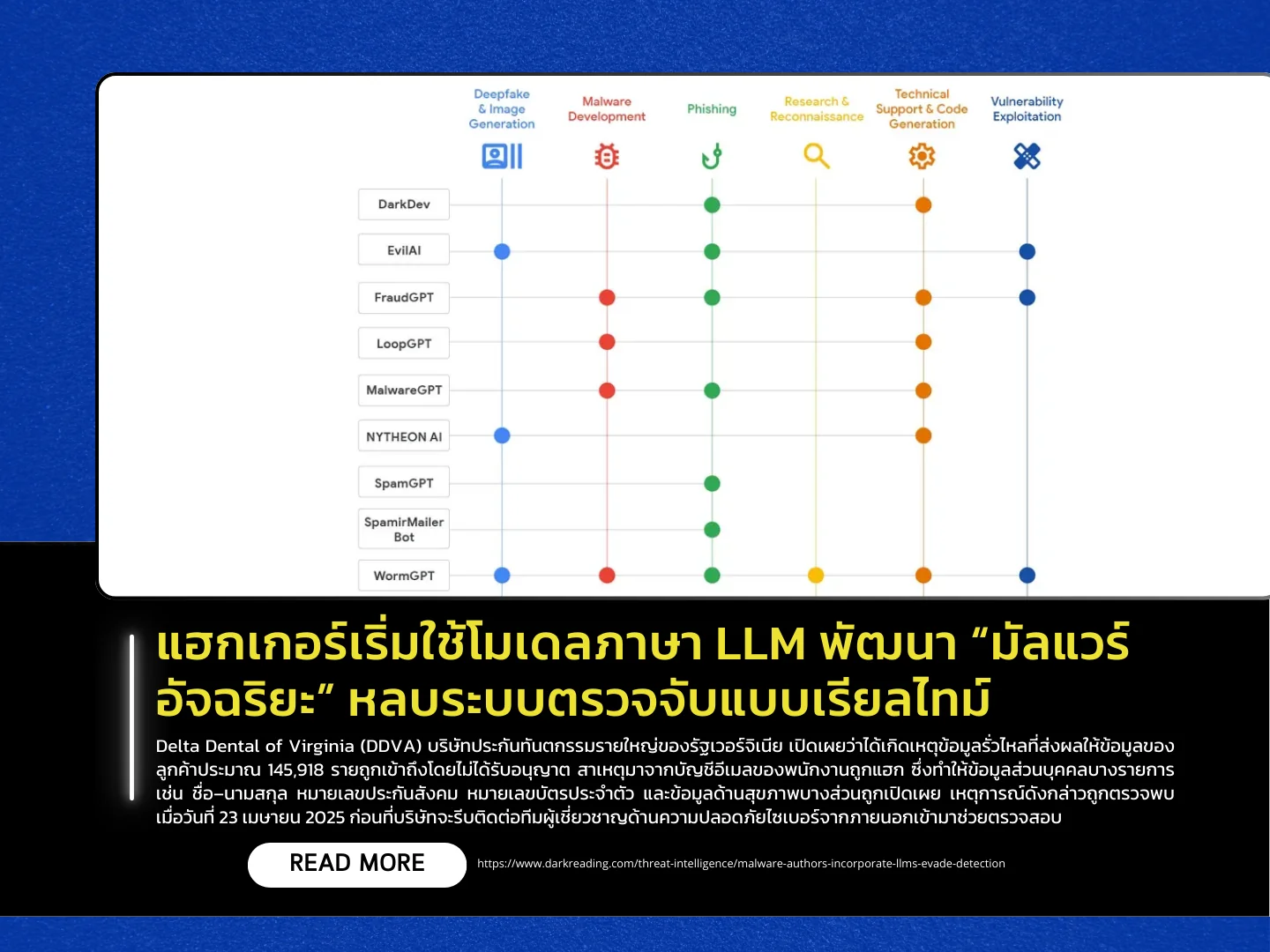

A new report from the Google Threat Intelligence Group (GTIG) describes emerging techniques used by threat actors who are experimenting with embedding large language models (LLMs), such as Google Gemini and Hugging Face models, directly into malware. The goal is to let malware rewrite its own code or generate new attack commands at runtime, so that it can dynamically evade security tools and so that even low-skill attackers can operate more sophisticated tools.

Experts note that LLM-powered malware can significantly boost the capabilities of advanced attackers while lowering the technical barrier for beginners. Although many of these LLM-enhanced malware samples are still prototypes, some have already been seen in real-world activity. Examples include FRUITSHELL, which embeds self-evasion logic, and QUIETVAULT, which uses AI assistance to locate and steal sensitive data from target systems. Analysts have also observed attackers using AI “jailbreak” tactics: they persuade LLMs to generate harmful code, a request that would normally be blocked, by claiming it is needed for training or Capture-the-Flag (CTF) competitions.

The integration of LLMs into malware is not yet widespread and is still limited by the need to connect to external AI services. Even so, the ability to mutate code in real time means this threat is expected to grow more sophisticated. Security experts recommend that organizations strengthen egress controls, closely monitor outbound traffic to external AI services, and deploy machine-learning-based behavioral detection instead of relying solely on traditional signature-based systems.
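
As an illustration of the outbound-traffic recommendation, the following is a minimal Python sketch that scans proxy or DNS log lines and flags connections to AI service endpoints from hosts that are not approved to use them. It is a sketch under stated assumptions, not a method taken from the report: the log format (`timestamp source_ip destination_host`), the function names, and the hostname watchlist are all hypothetical and would need to be adapted to the organization's own logging and the AI services it actually permits.

```python
from typing import Iterable, List, Set, Tuple

# Illustrative watchlist (assumption): API hostnames for the two services
# named in the article. Extend or replace it for your own environment.
AI_SERVICE_SUFFIXES = (
    "generativelanguage.googleapis.com",
    "api-inference.huggingface.co",
)


def is_ai_service(host: str) -> bool:
    """Return True if host equals, or is a subdomain of, a watchlisted name."""
    host = host.lower().rstrip(".")
    return any(host == s or host.endswith("." + s) for s in AI_SERVICE_SUFFIXES)


def flag_ai_egress(
    log_lines: Iterable[str], approved_sources: Set[str]
) -> List[Tuple[str, str]]:
    """Return (source_ip, destination_host) pairs worth reviewing.

    Assumes a hypothetical whitespace-separated log format:
        <timestamp> <source_ip> <destination_host>
    Lines with fewer than three fields are skipped.
    """
    flagged = []
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue
        _, src, host = parts[:3]
        # Flag only AI-service traffic from hosts not on the approved list.
        if is_ai_service(host) and src not in approved_sources:
            flagged.append((src, host))
    return flagged
```

A call such as `flag_ai_egress(lines, approved_sources={"10.0.0.7"})` would return only the AI-service connections made by hosts other than `10.0.0.7`. Hostname matching alone is easy to bypass (for example, through a relay or a different provider), so a check like this is best treated as one signal alongside the behavioral detection mentioned above.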

Source: https://www.darkreading.com/threat-intelligence/malware-authors-incorporate-llms-evade-detection